Music psychology and big data

Happy New Year, Dear Reader

I hope that you have enjoyed a pleasant holiday season and that 2014 brings you all the happiness, peace and prosperity that your heart desires.

2014 will be the Chinese year of the wood (or green) horse. It will also be the year that I finally come home to Yorkshire, to my family and friends, and to my fiancé (yes, dear reader, he proposed over the holidays!).

A new year brings new challenges. My beloved course on ‘Music and the Brain’ will come to an end here at the Hochschule Luzern, Switzerland, and I will move into a period of exciting grant writing with my colleagues in the music performance research group. So many ideas…

In general, our world of music psychology will also face many new challenges that come with the march of advancing technology. One such challenge that you may be familiar with is ‘Big Data’.

Big data refers to data-sets that are simply too large to handle by hand or with basic computing tools.

I first heard the term big data thanks to my fiancé, who works in internet marketing. His profession has been dealing with big data challenges for a while now, as the internet accumulates more and more information about our habits and boffins try to work out how to sift this nebulous mass to reveal its core patterns.

Big data is also becoming more important for areas of research such as disease prevention, genomics and environmental prediction, as well as for the BRAIN Initiative, a multi-million dollar project announced by the Obama administration in 2013 with the aim of mapping the activity of neural circuits in the human brain. Now that is BIG data!

Big data also has a future for us in music psychology, as I recently learned from a new paper, ‘On the virtuous and the vexatious in an age of big data’ by David Huron, published in Music Perception.

Huron is probably best known for his award-winning book ‘Sweet Anticipation’, in which he discusses musical expectation, but he is also an excellent public speaker and often provides stimulating commentary on broad topics of interest to music psychology. In this article he takes the reader through the history of big data in music research, as well as the possible riches and pitfalls of this new source of evidence about human musical behaviours.

Some of the earliest uses of computers to examine musical behaviours came in the 1940s and 50s, when IBM card sorters were used to examine patterns in folk music. This research marked the beginnings of computational musicology, the study of musical sound and manuscript on a grand scale using computer algorithms and machine learning techniques.

This field received a huge boost from the introduction of efficient music scanning technology around the turn of the millennium. Then came the growth of music informatics, which brought internet data into the study of people’s musical habits and preferences (more information is available from the Centre for Digital Music, UK).

Whether you knew it or not, big data seems to have grown exponentially in our musical world over the last few decades! Spend some time playing with Themefinder or the Echo Nest to see some of the latest tools that anyone can use.

Big data can teach us about the musical past. It can provide a method for studying historical trends in musical development; for example, see this study by Ani Patel and colleagues on the development of rhythm in European music. It can also help us predict the future. For more on a new big data project in music that uses Nokia download data to predict the ‘life and death of a track’, please read this previous blog post on the work of Dr Matthew Woolhouse.
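
How can a computer put a number on rhythm so that pieces from different centuries can be compared? One measure that Ani Patel and colleagues have used when comparing musical and speech rhythm is the normalized pairwise variability index (nPVI), which scores how strongly each note's duration contrasts with the next: nPVI = 100/(m−1) × Σ |d_k − d_(k+1)| / ((d_k + d_(k+1))/2), for m successive durations d. Below is a minimal Python sketch of that formula. The two melodies are invented for illustration, and this is not offered as the exact procedure of the study mentioned above.

```python
def npvi(durations):
    """Normalized Pairwise Variability Index of successive note durations.

    0 means perfectly even durations; higher values mean more contrast
    between each note and the one that follows it.
    """
    if len(durations) < 2:
        raise ValueError("need at least two durations")
    if any(d <= 0 for d in durations):
        raise ValueError("durations must be positive")
    total = 0.0
    for a, b in zip(durations, durations[1:]):
        # Difference between neighbours, scaled by their mean duration.
        total += abs(a - b) / ((a + b) / 2)
    return 100 * total / (len(durations) - 1)


# Hypothetical melodies, durations in beats (not taken from any study).
even = [1, 1, 1, 1, 1, 1]
dotted = [1.5, 0.5, 1.5, 0.5, 1.5, 0.5]
print(npvi(even))    # 0.0
print(npvi(dotted))  # 100.0
```

Because every pair is normalized by its own mean, the score does not depend on tempo, which is what makes it usable for comparing large numbers of pieces across periods.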

In general, big data is important because it allows us to minimize two major problems in psychology: Type 1 error (claiming an effect that is not really there) and Type 2 error (missing an effect that was there all along, usually because the sample is too small).

In both cases, more evidence minimizes the danger of slipping into error. In this sense Huron calls the collection of big data a ‘moral imperative’ for the science of humanity.
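
For the Type 2 half of this argument, a small simulation helps. The Python sketch below assumes two hypothetical groups of listeners whose ratings truly differ by 0.3 standard deviations, and counts how often a simple two-sample test detects that difference (a z-test with a known SD, chosen for brevity rather than realism; the function names are illustrative). With the threshold fixed at .05, the per-test false-positive rate stays near 5% whatever the sample size, so the simulation speaks mainly to Type 2 error.

```python
import math
import random
from statistics import fmean


def z_test_p(a, b, sd=1.0):
    """Two-sided p-value for a difference in group means, assuming a known SD.

    A simplification: a real analysis would estimate the SD (e.g. a t-test).
    """
    se = sd * math.sqrt(1 / len(a) + 1 / len(b))
    z = (fmean(a) - fmean(b)) / se
    return math.erfc(abs(z) / math.sqrt(2))


def power(n, effect=0.3, alpha=0.05, trials=1000, seed=1):
    """Share of simulated studies (n per group) that detect a true effect.

    `effect` is the real difference between the hypothetical groups, in SDs.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        a = [rng.gauss(effect, 1.0) for _ in range(n)]
        b = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if z_test_p(a, b) < alpha:
            hits += 1
    return hits / trials


for n in (20, 100, 500):
    pw = power(n)
    print(f"n={n:3d} per group: power={pw:.2f}, Type 2 error rate={1 - pw:.2f}")
```

Under these assumptions, power should rise from well under 0.5 at 20 listeners per group to essentially 1 at 500, so the chance of missing a real effect shrinks accordingly.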

But Huron also points out four caveats to this argument for big data:

1) The internet is not a level playing field. Not all cultures and/or languages are represented equally. So as much as the data-sets might be large, we must accept that at present they are also (on a global basis) still biased – not representative of all people.

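2) Large data-sets change what statistical significance means. With enough observations, even a trivially small effect will reach significance, so the old threshold of p = .05 has little meaning in this world. Instead we must focus on effect sizes.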

3) You can’t just test and test and test! Re-testing, or selectively testing promising portions of the data at the expense of the larger data-set, inflates the chance of Type 1 error. It’s like cherry picking, in a statistical sense. As such, informal exploratory data pruning must be avoided.

4) We must be clear about which predictions were made before the data were viewed and which were made after. These are known as a priori and post hoc hypothesizing, respectively. The latter does not count as an independent test of an idea using observations, which is the core of pure science. You must be careful not to fall into the trap of using the data to generate an explanation: data is evidence.
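
Caveat 2 can be made concrete with a little arithmetic. For two equal groups of size n, a z-test on a standardized mean difference d (Cohen's d) gives z = d × √(n/2). The Python sketch below holds d fixed at a negligible 0.02 standard deviations and shows how the p-value behaves as n grows. The numbers are illustrative rather than drawn from any real data-set, and the known-variance z-test is a simplifying assumption.

```python
import math


def p_for_effect(d, n_per_group):
    """Two-sided p-value a two-group z-test would give if the observed
    standardized mean difference were exactly d (Cohen's d).

    Assumes equal group sizes and a known variance: z = d * sqrt(n / 2).
    """
    z = d * math.sqrt(n_per_group / 2)
    # erfc keeps precision for very large z, where 1 - cdf would round to 0.
    return math.erfc(abs(z) / math.sqrt(2))


d = 0.02  # a negligible difference: 2% of a standard deviation
for n in (100, 10_000, 1_000_000):
    print(f"n={n:>9,} per group: d={d}, p={p_for_effect(d, n):.3g}")
```

With these assumptions p falls from roughly 0.9 at 100 per group to an astronomically small value at a million per group, while the effect itself never changes. That is why, with big data, the effect size is the number worth reporting.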

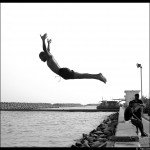

Huron speaks from experience, having worked with big data-sets for decades. He suggests (and I can confirm from my own experience) that the hardest thing for a young researcher presented with big data is to RESIST THE URGE to dive right in and start swimming around in the notes, numbers or words. The data is a finite resource: you can exhaust it if you don’t go in with a formal set of hypotheses and a structured plan of analysis.
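
The warning about exhausting the data ties back to caveat 3: test enough features of pure noise and some of them will look ‘significant’. The Python sketch below assumes 100 hypothetical features measured in two groups with no real difference anywhere, a known-SD z-test, and a Bonferroni correction (dividing the threshold by the number of tests) as one simple and conservative remedy. It is an illustration, not a procedure taken from Huron's paper.

```python
import math
import random
from statistics import fmean


def two_sided_p(a, b, sd=1.0):
    """z-test p-value for a difference in means, assuming a known SD."""
    se = sd * math.sqrt(1 / len(a) + 1 / len(b))
    z = (fmean(a) - fmean(b)) / se
    return math.erfc(abs(z) / math.sqrt(2))


def fishing_expedition(n_features=100, n=50, alpha=0.05, seed=7):
    """Test many hypothetical features where NO real effect exists.

    Returns (uncorrected, bonferroni): how many tests fall below alpha,
    and how many fall below alpha / n_features.
    """
    rng = random.Random(seed)
    pvals = []
    for _ in range(n_features):
        a = [rng.gauss(0.0, 1.0) for _ in range(n)]
        b = [rng.gauss(0.0, 1.0) for _ in range(n)]
        pvals.append(two_sided_p(a, b))
    uncorrected = sum(p < alpha for p in pvals)
    bonferroni = sum(p < alpha / n_features for p in pvals)
    return uncorrected, bonferroni


found, survived = fishing_expedition()
print(f"'findings' in pure noise: {found}; after Bonferroni: {survived}")
```

With 100 independent null tests at .05, about five spurious ‘findings’ are expected by chance alone; dividing the threshold by 100 brings the expected count down to about 0.05. Every extra unplanned look at the data spends some of that error budget.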

Another important idea is to keep records. I have been working with qualitative analysis of large data-sets for the past couple of years and I have come to realize the importance of note-taking. You can easily lose your train of thought to post hoc theorizing. A trail of breadcrumbs in the form of your notes is a good way to keep your hypotheses in sight and to understand, at the end, how you came to your conclusions.

Huron also suggests that music big data analysis is best done in an idiosyncratic fashion. This means that you might pick the work of one composer, period or culture to work on, and then aim to compare or test the results against other samples. This ensures that each test across data-sets has optimal independence.
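
In code, the simplest version of this idea is a split by group: explore freely within one composer's works, then test the resulting hypothesis only on composers you have not looked at. The Python sketch below assumes a hypothetical list of records with a `composer` field; the function name, fields and values are placeholders, not a real corpus format.

```python
def split_by_group(records, key, explore_groups):
    """Partition records so exploration and confirmation never share a group.

    records: list of dicts; key: the field that names the group (for
    example a composer); explore_groups: groups reserved for exploration.
    """
    explore = [r for r in records if r[key] in explore_groups]
    confirm = [r for r in records if r[key] not in explore_groups]
    # Sanity check: no group appears on both sides of the split.
    assert not {r[key] for r in explore} & {r[key] for r in confirm}
    return explore, confirm


# Hypothetical toy corpus; field names and values are placeholders.
pieces = [
    {"composer": "A", "title": "Piece 1"},
    {"composer": "A", "title": "Piece 2"},
    {"composer": "B", "title": "Piece 3"},
    {"composer": "C", "title": "Piece 4"},
]
explore, confirm = split_by_group(pieces, "composer", {"A"})
print([p["title"] for p in explore])  # ['Piece 1', 'Piece 2']
print([p["title"] for p in confirm])  # ['Piece 3', 'Piece 4']
```

Hypotheses formed while browsing composer A's works are then tested on B and C, which played no part in generating them. Splitting by composer rather than by individual piece matters: pieces by the same composer share style, so a piece-level split would leak information between the two halves.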

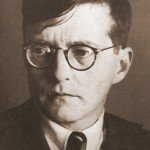

On a sad note, Huron laments the problems of music access. Public domain access to top-quality critical editions in music is limited and unlikely to be opened any time soon. The example given in the paper is that of Shostakovich. Under current laws most scholars simply cannot afford a copy of his complete works. Copyright is a thorny issue, fought hard on both sides, but it limits music big data in a way that will stall our study of modern composers for a generation.

Huron speculates that it may take about 50 years for 90% of non-commercial and commercial music archives to become available online. Digitization of scores marches on, hampered by limited budgets but boosted by hard-working, driven musicologists and archivists.

In another respect, academia is sadly falling behind commercial industries, which already have vast access to people’s music listening habits thanks to hidden music tracking software on our personal computers – a use of technology that would not likely pass any psychology ethics review board at present.

Despite the pitfalls and dangers, however, there is huge promise in the power of big data for music psychology. Our research resources for musical understanding are increasing by the second in the digitized age, and these new methods and techniques, hand in hand with classical empirical lessons and warnings, can shed new light on age-old questions about how we create and listen to music.

2 Comments

Buck

Firstly, congratulations!

Secondly, I look forward to reading of your use of Big Data in music research. The problem of uncontrolled samples is very real, and requires careful consideration when trying to draw conclusions. But what a fascinating approach!
