SMPC Day 2: Session 1 (Parallels and Impairments)

Day 2 of the conference began with overcast skies but a very enjoyable trip to the local coffee shop. I like the fact that you can actually get a small coffee here in Canada – ask for a small coffee in the UK (other than espresso) and you get a substantial mug of coffee which for me is a waste as I don’t want much. So, fueled with my delightful mini kick I headed to the 8.40am start.

Today I planned to duck between sessions; I started in ‘Parallels and Improvements’ with a talk by Bob Slevc (University of Maryland). Bob has been working on a follow-up to his 2009 study, in which he found interactions between the processing of in-harmonic chord sequences and so-called ‘garden path sentences’, where the participant is presented, mid-sentence, with a word that is syntactically incongruous. If a chord sequence and a concurrently presented sentence both contain a syntactic incongruity, then reading time slows.

This research supports Ani Patel’s Shared Syntactic Integration Resource Hypothesis (SSIRH). SSIRH argues that the brain shares the resources it uses to integrate structure in music and language, online. However, recent research found a similar interaction effect with semantic garden path sentences, where meaning rather than syntax was manipulated. This result suggests that more than just syntactic integration is shared between language and music.

Bob hypothesized that cognitive control may be the key. Perhaps what is being shared is a system that detects and resolves underlying representational conflict, as opposed to just syntax? He presented new research using the classic Stroop paradigm alongside his classic harmonic sequences, and he replicated the interaction he had found in the past with garden path sentences.

This all means that the SSIRH is being updated to reflect the latest data, in that there is more to resource sharing in music and language than just syntax. The key now will be to narrow down the processes involved and define what it is about ‘cognitive control’ that is shared.

After this talk I took a break to collect my thoughts. Bob’s work is very central to my research ideas so I needed the time to process the new information. After this break I moved into the session on ‘Hearing Impairments’.

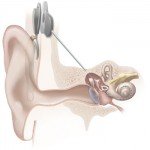

The first talk I heard was by Arla Good (Ryerson University, Canada) who has conducted a longitudinal study on the impacts of musical training on music perception, and emotional speech perception and production, in children with cochlear implants (CI).

Previous research has found that children with CI perform worse than their peers on the subtests of the Montreal Battery of Evaluation of Amusia (MBEA). However, research in adults with CI has found that musical training can help with music perception. Arla asked two questions: 1) can we also see improvements in children, and 2) do any improvements in music perception transfer to the processing and production of emotional speech?

She tested 18 children in total: 9 were randomly assigned to music lessons (20% vocal training and the rest keyboard) and 9 to art lessons. After 6 months of lessons, the CI children in the music group showed significant improvements in their overall MBEA scores, whereas those in the art group did not. This difference was driven largely by their performance on the memory test.

The music CI kids were also better on audio-only trials of emotional prosody perception, though to date there are no significant findings in the production tasks.

Overall, this data shows great promise in terms of helping CI children to improve crucial social aspects of speech perception through musical training.

The final talk I saw before coffee was by Martin Kirchberger (ETH Zuerich, Switzerland) who has developed a series of tests for assessing music perception in hearing impaired listeners. He discussed how the hearing aids that people buy to help with everyday life, in particular with understanding speech, often show minimal improvements in music hearing.

Kirchberger’s eventual aim is to provide recommendations on how hearing aids can be improved to help music listening.

To date he has worked on his 10-test hearing battery in order to demonstrate how music listening is currently affected by hearing aids. Alarmingly, he showed that the use of hearing aids did not significantly improve any of the different areas of music perception (e.g. meter, melody, harmony, timbre), and in fact there were two areas (level and attack detection) where the hearing aids made perception worse.

The message was clear: more attention needs to be paid to how our modern hearing aids, which have done so much to aid speech perception, adapt to the challenge of music perception. It is great to see that good data is being gathered to hopefully drive more research in this area.

OK dear reader, I am writing this summary for you while the other delegates are in the other room enjoying their morning coffee break. I think I will rush off to say hello (and perhaps nibble on a doughnut – there are lots here!) before heading to the next session…